vidformer

A research project for accelerating video/data visualization. See the preprint on arXiv for details.

Developed by the OSU Interactive Data Systems Lab.

Quick Start

To quickly try out Vidformer you can:

- try the online Vidformer Playground

Or, you can deploy it yourself:

git clone https://github.com/ixlab/vidformer

cd vidformer

docker build -t igni -f Dockerfile .

docker-compose -f vidformer-igni/docker-compose-local.yaml up

You can find details on this in our Getting Started Guide.

Why vidformer

Vidformer efficiently transforms videos, enabling faster annotation, editing, and processing of video data—without having to focus on performance. Just swap import cv2 with import vidformer.cv2 as cv2 to see video outputs instantly.

Vidformer uses a declarative specification format to represent transformations. This enables:

-

Transparent Optimization: Vidformer optimizes the execution of declarative specifications just like a relational database optimizes relational queries.

-

Lazy/Deferred Rendering: Video results can be retrieved on-demand, allowing for practically instantaneous playback of video results.

Vidformer usually renders videos 2-3x faster than cv2, and hundreds of times faster (practically instantly) when serving videos on-demand.

Vidformer builds on open technologies you may already use:

- OpenCV: A

cv2-compatible interface ensures both you (and LLMs) can use existing knowledge and code. - Supervision: Supervision-compatible annotators make visualizing computer vision models trivial.

- FFmpeg: Built on the same libraries, codecs, and formats that run the world.

- Jupyter: View transformed videos instantly right in your notebook.

- HTTP Live Streaming (HLS): Serve transformed videos over a network directly into any media player.

- Apache OpenDAL: Access source videos no matter where they are stored.

Documentation

About the project

Cite:

@misc{winecki2026_vidformer,

title={Vidformer: Drop-in Declarative Optimization for Rendering Video-Native Query Results},

author={Dominik Winecki and Arnab Nandi},

year={2026},

eprint={2601.17221},

archivePrefix={arXiv},

primaryClass={cs.DB},

url={https://arxiv.org/abs/2601.17221},

}

File Layout:

- ./vidformer: The core rendering library (Rust)

- ./vidformer-py: The Python frontend

- ./vidformer-igni: The vidformer server

- ./docs: The project docs

Vidformer components are detailed here.

License: Vidformer is open source under Apache-2.0. Contributions are welcome.

Acknowledgements: Vidformer is based upon work supported by the National Science Foundation under Awards #2118240 and #1910356.

Getting Started

The fastest way to try vidformer is with the hosted demo:

For local development, see Local Install, then follow the cv2 walkthrough.

Local Install

You can deploy the server locally with docker:

git clone https://github.com/ixlab/vidformer

cd vidformer

docker build -t igni -f Dockerfile .

docker-compose -f vidformer-igni/docker-compose-local.yaml up

vidformer-py can be installed with pip:

pip3 install vidformer

There are two ways to connect the client to the server. Either use the environment variables printed out by the server or set it manually:

import vidformer as vf

import vidformer.cv2 as cv2

cv2.set_server(vf.Server("<ENDPOINT>", "<API_KEY>"))

Run admin commands

Admin commands can be run from inside the server container:

docker-compose -f vidformer-igni/docker-compose-local.yaml exec igni bash

vidformer-igni user ls

Run vidformer-igni --help for other commands.

Getting Started - cv2

This is a walkthrough of getting started with the vidformer OpenCV cv2 compatibility layer.

Installation

Hello, world!

Copy in your video, or use ours:

curl -O https://f.dominik.win/data/dve2/tos_720p.mp4

Then just replace import cv2 with import vidformer.cv2 as cv2.

Here’s our example script:

import vidformer.cv2 as cv2

cap = cv2.VideoCapture("tos_720p.mp4")

fps = cap.get(cv2.CAP_PROP_FPS)

width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))

height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

out = cv2.VideoWriter("output.mp4", cv2.VideoWriter_fourcc(*"mp4v"),

fps, (width, height))

while True:

ret, frame = cap.read()

if not ret:

break

cv2.putText(frame, "Hello, World!", (100, 100), cv2.FONT_HERSHEY_SIMPLEX,

1, (255, 0, 0), 1)

out.write(frame)

cap.release()

out.release()

Stream the Results

Saving videos to disk works, but we can also display them in the notebook. Since we stream the results and only render them on demand this can start practically instantly!

First, replace "output.mp4" with None to skip writing the video to disk.

Then you can use cv2.vidplay() to play the video!

import vidformer.cv2 as cv2

cap = cv2.VideoCapture("tos_720p.mp4")

fps = cap.get(cv2.CAP_PROP_FPS)

width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))

height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

out = cv2.VideoWriter(None, cv2.VideoWriter_fourcc(*"mp4v"),

fps, (width, height))

while True:

ret, frame = cap.read()

if not ret:

break

cv2.putText(frame, "Hello, World!", (100, 100), cv2.FONT_HERSHEY_SIMPLEX,

1, (255, 0, 0), 1)

out.write(frame)

cap.release()

out.release()

cv2.vidplay(out)

⚠️ By default

cv2.vidplay()will display a video in a Jupyter notebook. If running outside a Jupyter notebook you can passmethod="link"to return a link instead.

The vidformer modules

vidformer is a highly modular suite of tools that work together:

-

vidformer-py: A Python 🐍 client for declarative video transformation

- Provides an easy-to-use library for symbolically representing transformed videos

- Acts as a client for a vidformer server

-

libvidformer: The core data-oriented declarative video editing library

- An embedded video processing execution engine with low-level interfaces

- Systems code, written in Rust 🦀

-

vidformer-igni: The vidformer server

- A multi-tenant scale-out server

- Designed for Video on Demand only

- Does not support full-video exports

- All video sources must be over the network, not local

- Enables live streaming and waiting on external dependencies for even lower time-to-playback latency

Client libraries in other languages: Writing a vidformer client library for other languages is simple. It’s a few hundred lines of code, and you just have to construct some JSON. Contributions or suggestions for other languages are welcome.

vidformer - Video Data Transformation Library

(lib)vidformer is a video transformation library. It handles the movement, control flow, and processing of video and conventional (non-video) data.

Quick links:

About

- It’s written in Rust 🦀

- So it does some fancy parallel processing and does so safely

- Uses the FFmpeg libav libraries for multimedia stuff

- So it should work with nearly every video file ever made

- Uses Apache OpenDAL for I/O

- So it can access videos in a bunch of storage services

- Implements filters using OpenCV

Building

This crate requires linking with FFmpeg, as detailed in the rusty_ffmpeg crate.

We currently target FFmpeg 7.0.

vidformer-py

vidformer-py is a Python 🐍 frontend for vidformer. It has an API compatibility layer with OpenCV cv2, as well as some supervision annotators. Our getting started guide explains how to use it.

Quick links:

- 📦 PyPI

- 📘 Documentation - vidformer-py

- 📘 Documentation - vidformer.cv2

- 📘 Documentation - vidformer.supervision

- 🧑💻 Source Code

Publish:

export FLIT_USERNAME='__token__' FLIT_PASSWORD='<token>'

flit publish

vidformer-igni

The vidformer server for the cloud.

Quick links:

Local Setup

See the install guide.

Development Setup

docker-compose -f docker-compose-db.yaml up

export 'IGNI_DB=postgres://igni:igni@localhost:5432/igni'

cargo run -- user add --name test --api-key test --permissions test

cargo run --release -- server --config igni.toml

Server Deployment

# From vidformer project root

docker build -t igni -f Dockerfile .

docker-compose -f vidformer-igni/docker-compose-prod.yaml up

For TLS certs:

docker-compose -f vidformer-igni/docker-compose-prod.yaml run --rm certbot certonly --webroot --webroot-path /var/www/certbot/ -d api.example.com -d cdn.example.com

Guest account setup (for colab notebook)

docker ps

docker exec -it <igni container> bash

vidformer-igni user add --name guest --permissions guest --api-key VF_GUEST

vidformer-igni user ls

# ToS video

vidformer-igni source add --user-id 98f6aa2a-e622-40bc-a0cd-e05f73f7e398 --name vf-sample-media/tos_720p.mp4 --stream-idx 0 --storage-service http --storage-config '{"endpoint":"https://f.dominik.win"}'

vidformer-igni source add --user-id 98f6aa2a-e622-40bc-a0cd-e05f73f7e398 --name vf-sample-media/bbb_720p.mp4 --stream-idx 0 --storage-service http --storage-config '{"endpoint":"https://f.dominik.win"}'

vidformer-igni source add --user-id 98f6aa2a-e622-40bc-a0cd-e05f73f7e398 --name vf-sample-media/tos_720p-yolov8x-seg-masks.mkv --stream-idx 0 --storage-service http --storage-config '{"endpoint":"https://f.dominik.win"}'

# Apollo 11 videos

for i in $(seq -f "%02g" 1 23); do

vidformer-igni source add --user-id 98f6aa2a-e622-40bc-a0cd-e05f73f7e398 --name "vf-sample-media/apollo-11-mission/Apollo 11 $i.mp4" --stream-idx 0 --storage-service http --storage-config '{"endpoint":"https://f.dominik.win"}'

done

Filters

OpenCV/cv2 Functions

Legend:

- ✅ - Support

- 🔸 - Support via OpenCV cv2

- ❌ - Not yet implemented

Vidformer-specific Functions

cv2.vidplay(video)- Play a VideoWriter, Spec, or SourceVideoWriter.spec()- Return the Spec of an output videoFrame.numpy()- Return the frame as a numpy arraycv2.setTo- The OpenCVMat.setTofunction (not in cv2)cv2.zeros- Create a black frame (equivalent tonumpy.zeros)

opencv

| Class | Status |

|---|---|

| VideoCapture | ✅ |

| VideoWriter | ✅ |

| VideoWriter_fourcc | ✅ |

| Function | Status |

|---|---|

| imread | ✅ |

| imwrite | ✅ |

opencv.imgproc

Drawing Functions:

| Function | Status |

|---|---|

| arrowedLine | ✅ |

| circle | ✅ |

| clipLine | 🔸 |

| drawContours | ✅ |

| drawMarker | ✅ |

| ellipse | ✅ |

| ellipse2Poly | 🔸 |

| fillConvexPoly | ✅ |

| fillPoly | ✅ |

| getFontScaleFromHeight | 🔸 |

| getTextSize | 🔸 |

| line | ✅ |

| polylines | ✅ |

| putText | ✅ |

| rectangle | ✅ |

opencv.core

| Function | Status |

|---|---|

| addWeighted | ✅ |

| copyMakeBorder | ✅ |

| flip | ✅ |

| hconcat | ✅ |

| resize | ✅ |

| rotate | ✅ |

| vconcat | ✅ |

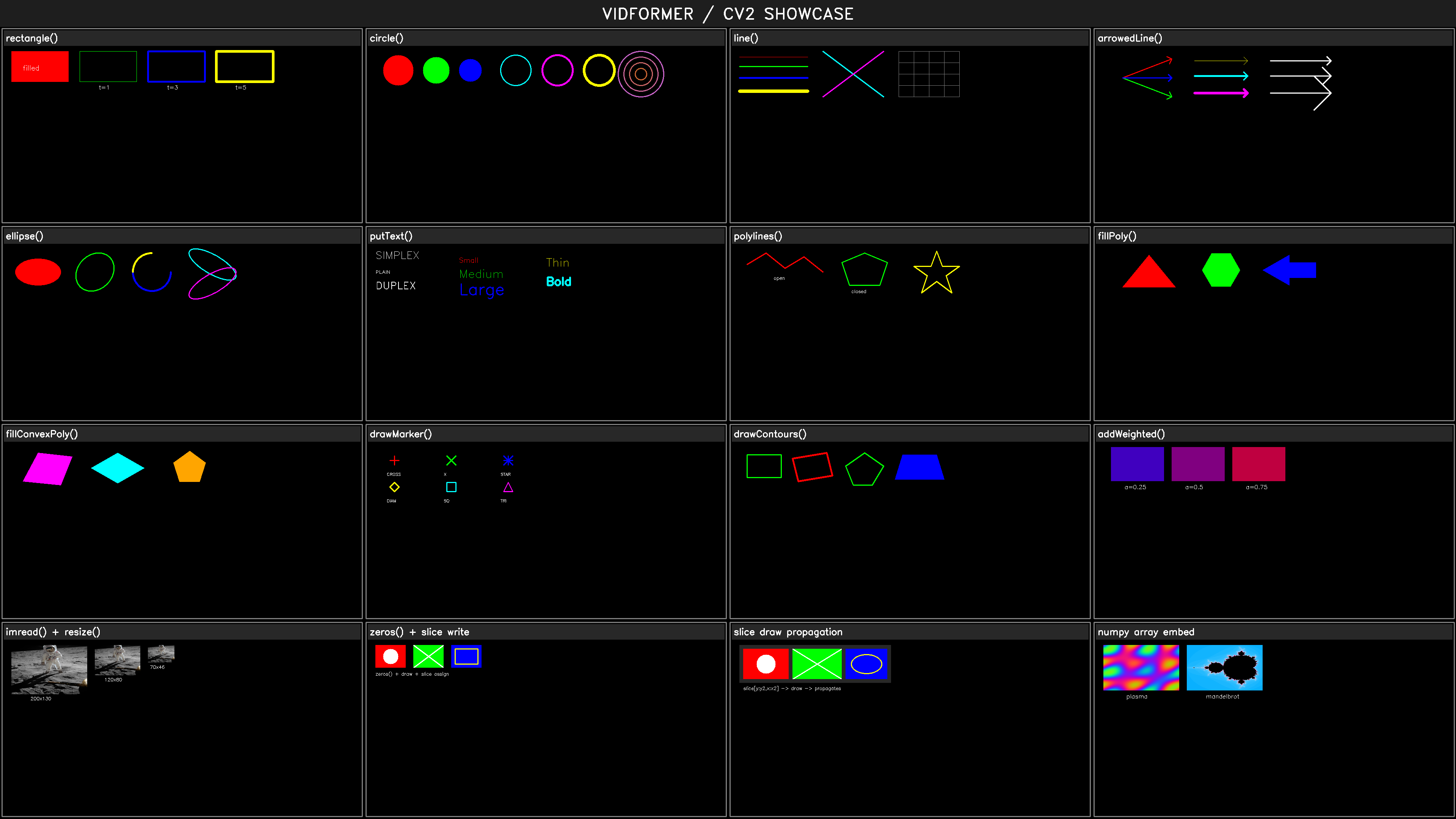

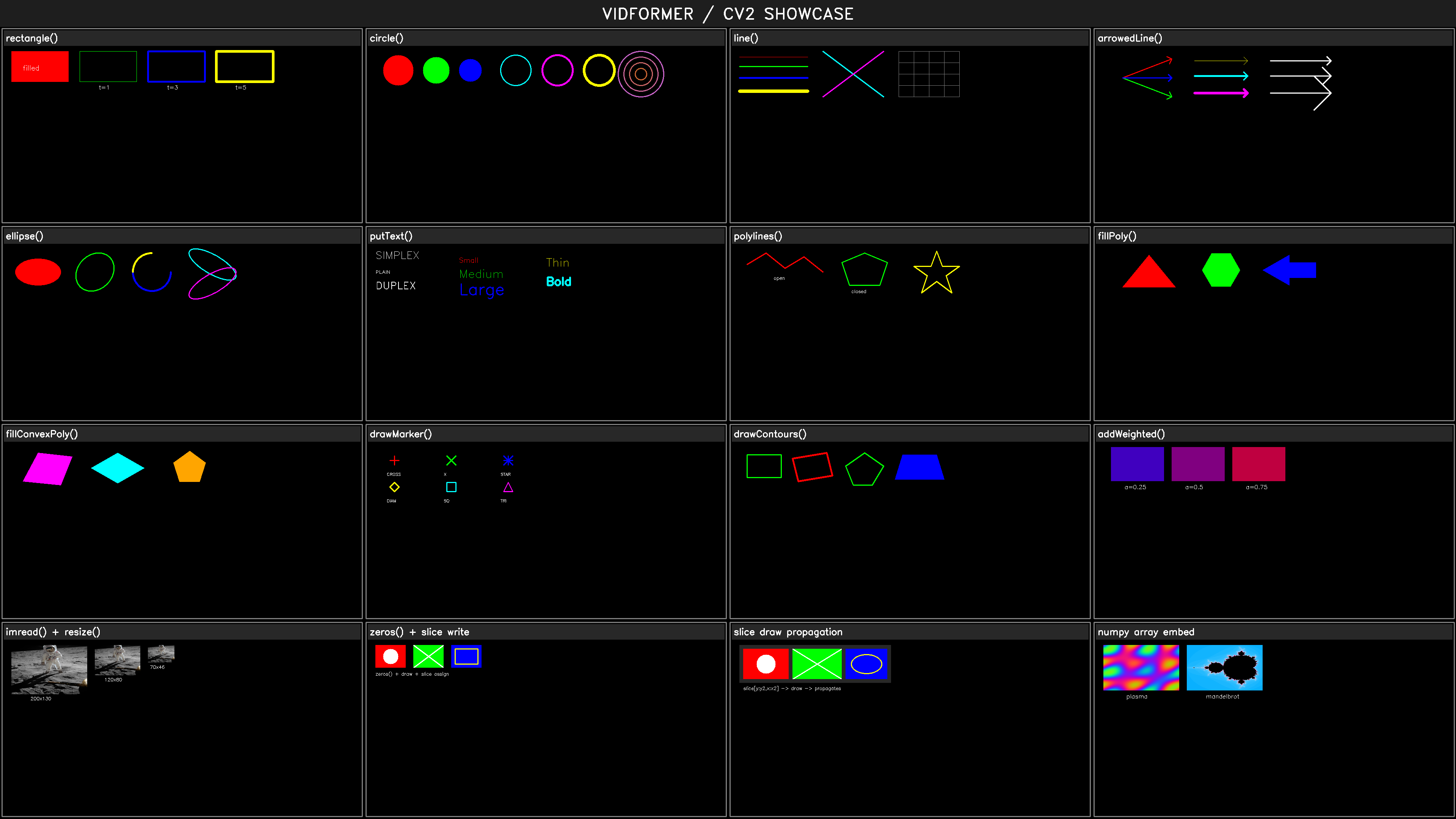

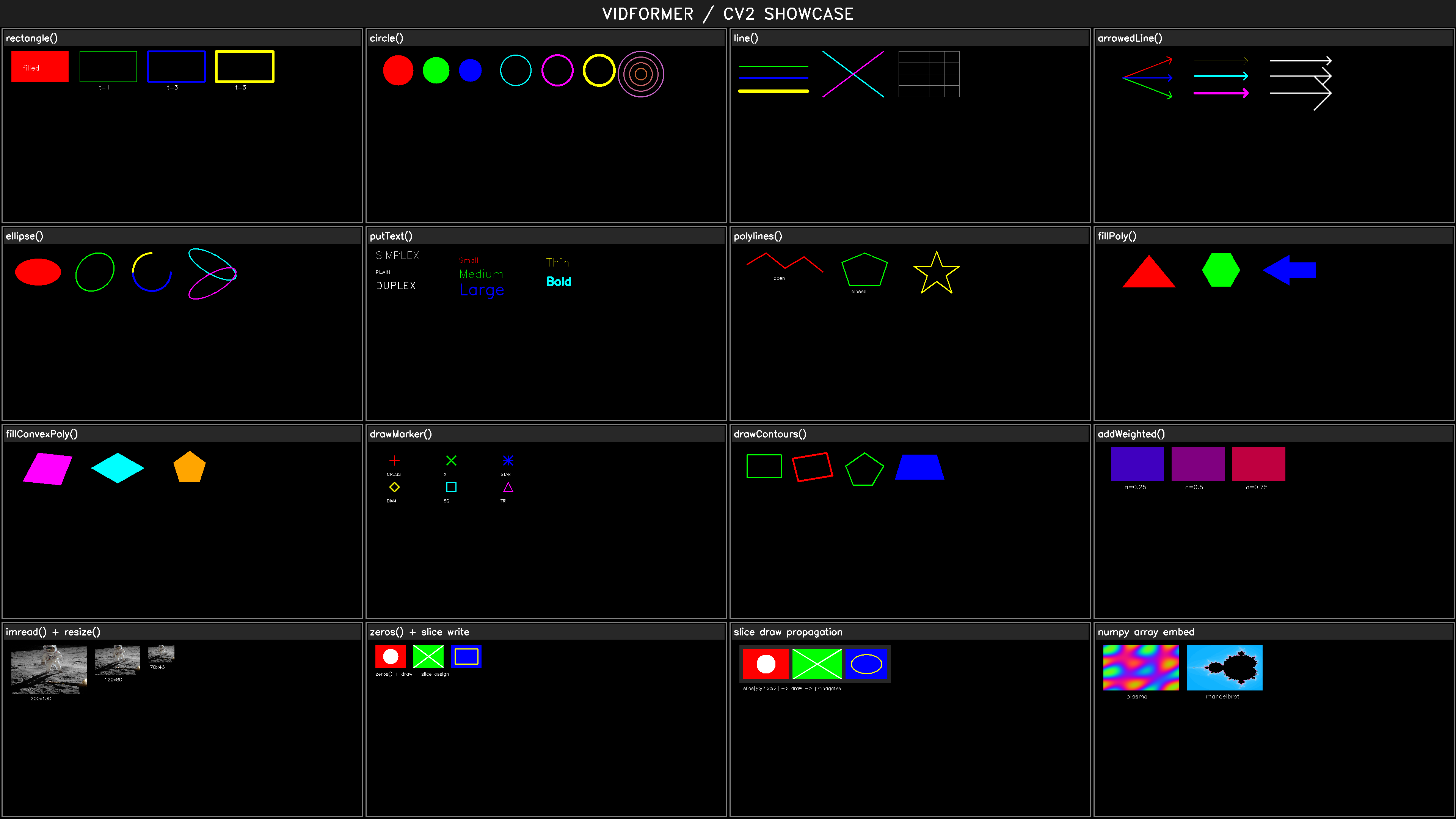

Output Comparison

Vidformer’s cv2 output compared to native OpenCV (source code):

Vidformer:

OpenCV:

Per-pixel Diff:

The differences mainly come from Vidformer using FFmpeg’s swscale for resize instead of OpenCV’s resize.

Hardware Acceleration

This page details how to compile vidformer with NVIDIA NVENC and similar hardware accelerated codecs. We assume Docker is running on a system with CUDA. Other codecs also work, see FFmpeg docs. Testing this with GitHub Actions is impossible, so it may be a tad outdated.

The container must be run with these arguments: --gpus all --runtime=nvidia -e NVIDIA_DRIVER_CAPABILITIES=all.

If using Dev Containers, these can be added to the devcontainer.json file under runArgs.

The scripts/deps_ffmpeg.sh needs to be patched to include --enable-ffnvcodec.

Then you can run this in the container:

# From project root delete old FFmpeg build

rm -rf ffmpeg

sudo apt update -y

sudo apt install build-essential yasm cmake libtool libc6 libc6-dev unzip wget libnuma1 libnuma-dev -y

# Install (see https://trac.ffmpeg.org/wiki/HWAccelIntro)

rm -rf nv-codec-headers

git clone https://git.videolan.org/git/ffmpeg/nv-codec-headers.git

cd nv-codec-headers

# NOTE: Depending on your driver, you may want to checkout an older version tag here

make

sudo make install

cd -

# Install cuda

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \

&& curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \

sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \

sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

sudo sed -i -e '/experimental/ s/^#//g' /etc/apt/sources.list.d/nvidia-container-toolkit.list

sudo apt update -y

sudo apt-get install -y nvidia-container-toolkit nvidia-cuda-toolkit

# Build ffmpeg

./scripts/deps_ffmpeg.sh

# Test it out

./ffmpeg/build/bin/ffmpeg -i myinputvid.mp4 -c:v h264_nvenc out.mp4 -y

Now you can recompile vidformer and use hardware accelerated codecs.

FAQ

What is vidformer NOT?

vidformer is strongly complementary to many tools, but is not:

- A conventional video editor (like Premiere Pro or Final Cut)

- A video database/VDBMS

- A natural language query interface for video

- A computer vision library (like OpenCV)

- A computer vision AI model (like CLIP or YOLO)

If you’re working on any of the latter four, vidformer may be useful for accelerating your visualization workflows.

What video formats does vidformer support?

In short, essentially everything. vidformer uses the FFmpeg/libav* libraries internally, so any media FFmpeg works with should work in vidformer as well. We support many container formats (e.g., mp4, mov) and codecs (e.g., H.264, VP8).

A full list of supported codecs enabled in a vidformer build can be found by running:

vidformer-cli codecs

Can I access remote videos on the internet?

Yes, vidformer uses Apache OpenDAL for I/O, so most common data/storage access protocols are supported. However, not all storage services are enabled in distributed binaries. We guarantee that HTTP, S3, and the local filesystem are always available.

How does vidformer compare to FFmpeg?

vidformer is far more expressive than the FFmpeg filter interface. Mainly, vidformer is designed for data-oriented work, so edits are created programmatically and can reference data. Also, vidformer enables serving result videos on demand.

vidformer uses the FFmpeg/libav* libraries internally, so any media FFmpeg works with should also work in vidformer.

How does vidformer compare to OpenCV/cv2?

vidformer orchestrates data movement, but does not implement image processing directly. Most use cases will still use OpenCV for this.